You can use a subflow as the first stage in a dataflow to read data from a source and even perform some processing on the data before passing it to the parent dataflow.

A subflow can be as simple as a single source stage, configured the way you want to reuse it across multiple dataflows. It can also be more complex: a subflow that reads data and then processes it in some way before passing it to the parent dataflow.

- In Enterprise Designer, click .

- Drag the appropriate data source stage from the palette onto the canvas and configure it.

  For example, if you want the subflow to read data from a comma-separated file, drag a Read from File stage onto the canvas.

- If you want the subflow to process the data before sending it to the parent dataflow, add whatever additional stages you need to perform that preprocessing.

-

At the end of the dataflow, add an Output stage and configure it.

This allows the data from the subflow to be sent to the parent dataflow.

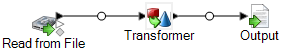

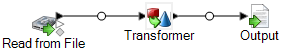

For example, if you created a subflow that reads data from a file then uses a

Transformer stage to trim white space and standardize the casing of a field,

you would have a subflow that looks like this:

- Double-click the Output stage and select the fields you want to pass into the parent dataflow.

- Select and save the subflow.

- Select to make the subflow available to include in dataflows.

- In the dataflow where you want to include the subflow, drag the subflow from the palette onto the canvas.

- Connect the subflow to the dataflow stage you want.

  Note: Because the subflow contains a source stage rather than an Input stage, the subflow icon has only an output port. It can therefore be used only as a source in the dataflow.
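Enterprise Designer builds subflows graphically, so no code is involved in the steps. As a rough analogy only, the Python sketch that follows models the example subflow as a chain of generators: a source stage that parses comma-separated text, a Transformer-like stage that trims white space and standardizes the casing of one field, and an Output stage that passes only the selected fields on. The subflow function accepts no records from an earlier stage, which mirrors a subflow whose icon has only an output port. All function names, the `Name`/`City`/`Id` fields, and the choice of title case as the "standardized" casing are hypothetical illustrations, not Spectrum APIs or behavior.

```python
import csv
import io
from typing import Dict, Iterable, Iterator, List

# Hypothetical model: each record is a dict of field name -> value.
Record = Dict[str, str]


def read_from_file(lines: Iterable[str]) -> Iterator[Record]:
    """Source stage (analogous to Read from File): parse comma-separated text."""
    yield from csv.DictReader(lines)


def transformer(records: Iterable[Record], field: str) -> Iterator[Record]:
    """Preprocessing stage: trim white space and title-case one field.
    Title case is an assumed choice of standardized casing."""
    for rec in records:
        out = dict(rec)
        out[field] = out[field].strip().title()
        yield out


def output_stage(records: Iterable[Record], fields: List[str]) -> Iterator[Record]:
    """Output stage: pass only the selected fields to the parent dataflow."""
    for rec in records:
        yield {name: rec[name] for name in fields}


def read_from_file_and_transform(lines: Iterable[str]) -> Iterator[Record]:
    """The whole hypothetical subflow. Its only input is the file it reads,
    not records from an upstream stage, so it can only start a dataflow."""
    return output_stage(transformer(read_from_file(lines), "Name"), ["Name", "City"])


def broadcaster(records: Iterable[Record], branches: int) -> List[List[Record]]:
    """Parent-dataflow stage: send an independent copy of every record to each branch."""
    copies: List[List[Record]] = [[] for _ in range(branches)]
    for rec in records:
        for branch in copies:
            branch.append(dict(rec))
    return copies


# Parent dataflow: the subflow is the first stage and feeds a broadcaster.
source_text = io.StringIO("Id,Name,City\n1,  alice SMITH ,Boston\n2,bob jones,Denver\n")
left, right = broadcaster(read_from_file_and_transform(source_text), 2)
print(left)  # [{'Name': 'Alice Smith', 'City': 'Boston'}, {'Name': 'Bob Jones', 'City': 'Denver'}]
```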

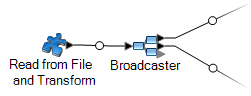

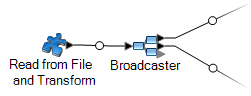

The parent dataflow now uses the subflow you created as input. For example,

if you created a subflow named "Read from File and Transform" and you add

the subflow and connect it to a Broadcaster stage, your dataflow would look

like this: