After you have populated the dimension tables in your data warehouse, you are ready

to populate the fact table. You populate a fact table with numeric measurements from

tables in the OLTP database.

Important: You must populate the dimension tables before populating the fact table.

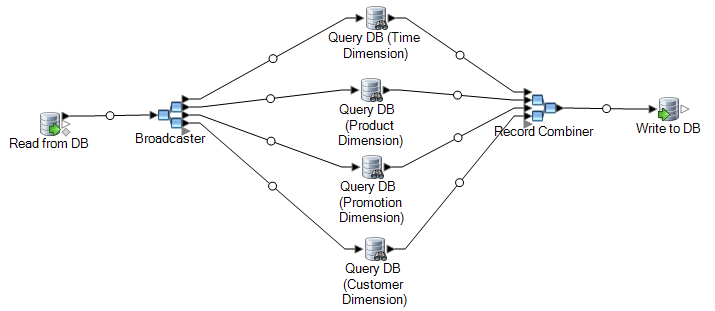

The following procedure describes how to use Spectrum™ Technology Platform to populate a fact table in your data warehouse. In this procedure, you will create a dataflow reads in source data from a table in your source database, replaces natural keys from the source tables with surrogate keys from the dimension tables, then loads the updated record containing the surrogate keys and the fact data from source tables into the fact table.

-

In Management Console, create connections to your data source and the data warehouse.

-

In Enterprise Designer, select .

-

Based on the source of the data you want to write to the fact table, drag the appropriate stage onto the canvas.

- To use data from a database to populate the table, drag the Read from DB stage onto the canvas.

- To use data from a flat file to populate the table, drag the Read from File stage onto the canvas.

- To use data from a variable format file to populate the table, drag the Read from Variable Format File stage onto the canvas.

- To use data from an XML file to populate the table, drag the Read from XML stage onto the canvas.

-

Double-click the source stage you just placed on the canvas and configure it to

point to the source of the data you want to populate to the fact table.

Note: Typically, a dataflow that populates a fact table reads data from a database as opposed to a file. Because this is the most common scenario, the examples in the rest of this procedure use Read from DB.

-

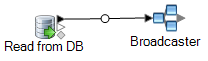

Drag a Broadcaster stage onto the canvas and connect the source stage to it.

Your dataflow now looks like this:

-

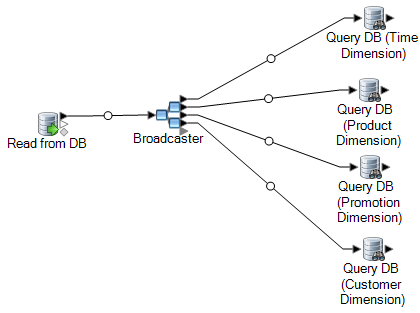

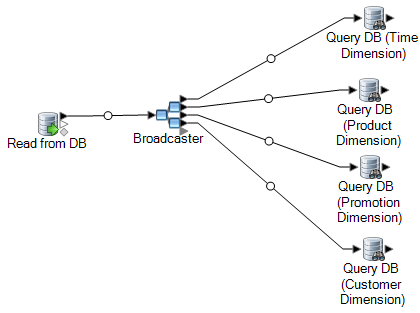

Drag one Query DB stage onto the canvas for each dimension table in your data warehouse and connect them to the Broadcaster stage.

For example, if you have four dimension tables in your data warehouse, drag

four Query DB stages onto the canvas. Your dataflow would look like

this:

The Query DB stages will be used to look up the surrogate key for each dimension using the natural key from the data source. The surrogate key will then replace the natural in each record being loaded into the fact table.

Tip: You can modify the name of the stage to make it easy to see which table each stage queries.

-

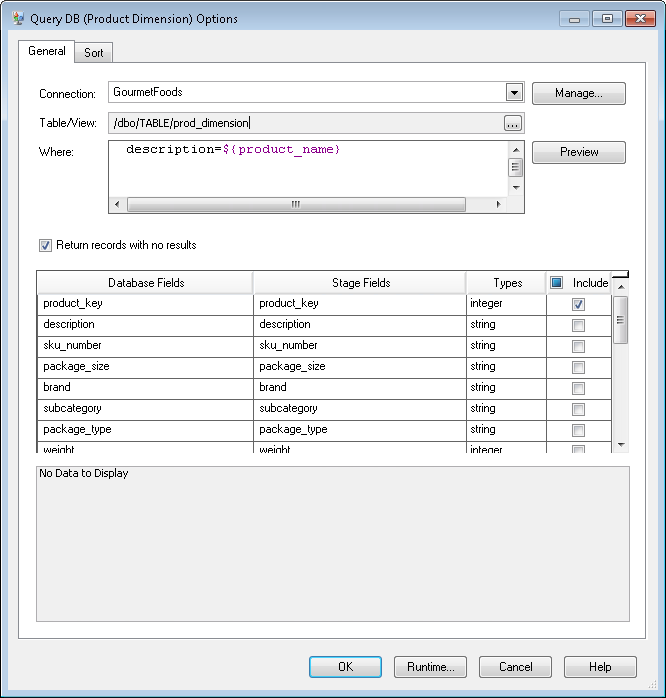

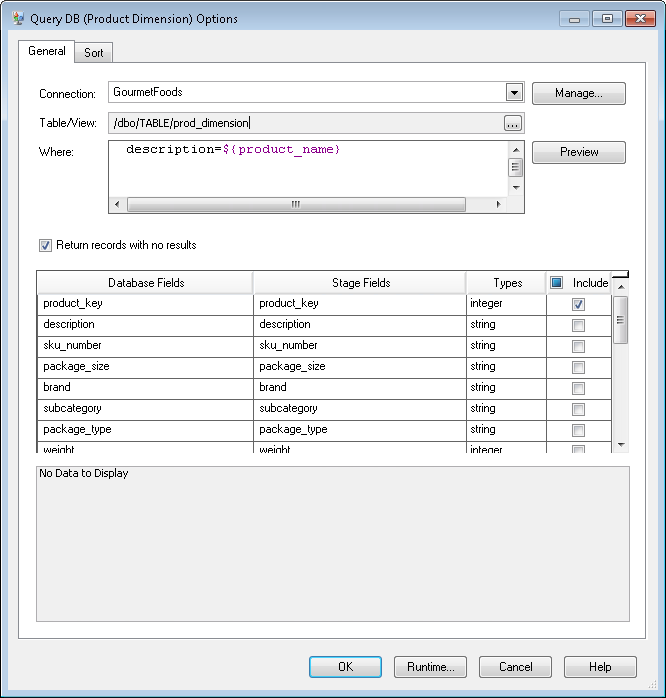

Configure each Query DB stage so that it looks up the surrogate key for each natural key from the data source. To do this:

-

In the Connection field, specify the connection to the data warehouse.

-

In the Table/View field, select the dimension table that you want this stage to query.

-

In the Where field, write a WHERE statement that looks up the surrogate key based on the value in the appropriate dataflow field.

For example, this would look up the surrogate key for a product by finding the record in the dimension table whose value in the description column matches the value in the data source's product_name field.

description=${product_name}

-

In the Include column select the database column that contains the surrogate key.

For example, a Query DB stage that looks up the surrogate key for a product name would look like this:

In this example, the query looks up the product key by finding the record in the prod_dimension table where the value in the description column matches the value in the dataflow field product_name. The stage returns the product_key field from the table and adds it to the dataflow, as indicated by the checked box in the Include column.

-

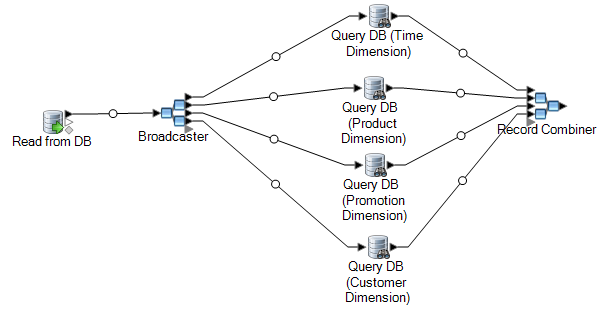

Drag a Record Combiner stage to the canvas and connect all the Query DB stages to it.

Your dataflow should now look like this:

-

Drag a Write to DB stage onto the canvas and connect it to the Record Combiner stage.

Your dataflow should now look like this:

-

Configure the Write to DB stage to write the records to the fact table. To do this:

-

In the Connection field, specify the connection to the data warehouse.

-

In the Table/View field, select the fact table that you want this stage to query. If the fact table does not already exist in the data warehouse, click Create Table to create the fact table in the data warehouse.

-

For each field that you want to write to the fact table, check the box in the Include column.

-

On the Runtime tab, notice that by default the Insert option is selected for the write mode. Typically fact table population is run in insert mode, so you can leave this option selected.

-

Save and run your dataflow.

Example of Replacing Source Data with Keys from the Dimension Table

Consider this record:

March 28 2013,Parsley Garlic Pasta,Mile High Gourmet Market,78.35

In this example, there is a date field, followed by a product name (Parsley Garlic Pasta), a customer (Mile High Gourmet Market) and an amount (78.25). The data warehouse has dimension tables for the date, product name, and customer, so the natural keys in the record need to be replaced with the surrogate keys from the dimension tables. To accomplish this, the dataflow would have three Query DB stages, one that looks up the surrogate key for the date, one that looks up the surrogate key for the product name, and one that looks up the surrogate key for the customer.

Each Query DB would have a WHERE statement that looks up the surrogate key.

As a result of these lookups, the record might look like this when written to the fact table:

711,1,15,78.35

Notice that the natural keys for date, product name, and customer have been replaced with surrogate keys.