Database Pool Size and Runtime Instances

In most Spectrum™ Technology Platform environments there are multiple flows running at the same time, whether they are batch jobs or services responding to web service or API requests. To optimize concurrent processing, you can use the database pool size setting, which limits the number of concurrent requests a Spectrum database handles, and runtime instances, which controls the number of instances of a flow stage that run concurrently. These two settings should be tuned together to achieve optimal performance.

Database Pool Size

Spectrum databases contain reference data used by certain stages, such as postal data used to validate addresses, or geocoding data used to geocode addresses. These databases can be configured to accept multiple concurrent requests from the dataflow stages or services that use them, thereby improving the performance of the dataflows or service requests. The database pool size sets the maximum number of concurrent requests that a Spectrum database will process. By default, Spectrum databases have a pool size of 4, meaning the database can process four requests simultaneously.

The optimal pool size varies by module. You will generally see the best results by setting the pool size between one-half to twice the number of CPUs on the server, with the optimal pool size for most modules being the same as the number of CPUs. For example, if your server has four CPUs you may want to experiment with a pool size between 2 (one-half the number of CPUs) and 8 (twice the number of CPUs) with the optimal size possibly being 4 (the number of CPUs).

When modifying the pool size you must also consider the number of runtime instances specified in the dataflow for the stages accessing the database. Consider for example a dataflow that has a Geocode US Address stage that is configured to use one runtime instance. If you set the pool size for the US geocoding database to four, you will not see a performance improvement because there would be only one runtime instance and therefore there would only be one request at a time to the database. However, if you were to increase the number of runtime instances of Geocode US Address to four, you might then see an improvement in performance since there would be four instances of Geocode US Address accessing the database simultaneously, therefore using the full pool.

Runtime Instances

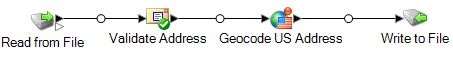

Each stage in a dataflow operates asynchronously in its own thread and is independent of any other stage. This provides for parallel processing of stages in a dataflow, allowing you to utilize more than one runtime instance for a stage. This is useful in dataflows where some stages process data faster than others. This can lead to an unbalanced distribution of work among the threads. For example, consider a dataflow consisting of the following stages:

Depending on the configuration of the stages, it may be that the Validate Address stage processes records faster than the Geocode US Address stage. If this is the case, at some point during the execution of the dataflow all the records will have been processed by Validate Address, but Geocode US Address will still have records to process. In order to improve performance of this dataflow, it is necessary to improve the performance of the slowest stage - in this case Geocode US Address. One way to do that is to specify multiple runtime instances of the stage. Setting the number of runtime instances to two, for example, means that there will be two instances of that stage, each running in its own thread, available to process records.

As a general rule, the number of runtime instances should be at least equal to the number of instances of the remote component. See the Administration Guide for information about remote components. While specifying multiple runtime instances can help improve performance, setting this value too high can strain your system resources, resulting in decreased performance.

Tuning Procedure

Finding the right settings for database pool size and runtime instances is a matter of experimenting with different settings to find the ones maximize available server resources without overloading resources and causing reduced performance.

- Begin by finding sample data to use as you test different settings. The sample dataset should be large enough that execution time is measurable and can be validated for consistency. The sample data should also be representative of the actual data you want to process. For example, if you are doing performance testing for geocoding, be sure that your test data has an equal number of records for all the countries you intend to geocode.

- If you are testing a service or dataflow that requires the use of a database resource, such as postal databases or geocoding databases, make sure that you have the latest version of the database installed.

- With sample data ready and the latest database resources installed, create a simple dataflow that reads data from a file, processes it with the stage you want to optimize, and writes to a file. For example, if you want to test performance settings for Validate Address, create a dataflow consisting of Read from File, Validate Address, and Write to File.

- Set the database resource pool size to 1:

- Open Management Console.

- Go to .

- Select the database resource you want to optimize and click the Modify button

.

. - In the Pool size field, specify 1.

- Click OK.

- Set the stage's runtime instances to 1:

- Open the dataflow in Enterprise Designer.

- Double-click the stage that you want to set to use multiple runtime instances.

- Click Runtime.Note: Not all stages are capable of using multiple runtime instances. If there is no Runtime button at the bottom of the stage's window, the stage is not capable of using multiple runtime instances.

- Select Local and specify 1.

- Click OK to close the Runtime Performance window, then click OK to close the stage.

- Calculate baseline performance by running the dataflow several times and recording the

average values for:

- Elapsed time

- CPU utilization

- Memory utilization

Tip: You can use the JMX console to monitor performance. For more information, see Monitoring Performance with the JMX Console. - Run multiple instances of the job concurrently, if this is a use case that must be

supported. Record elapsed time, CPU utilization, and memory utilization for each scenario.Tip: You can use a file monitor to run multiple instances of a job at once. For more information, see Triggering a Flow with a Control File.

- Increment the database resource pool size and the stage runtime instances setting.

- Restart the server.

- Run the dataflow again, recording the elapsed time, CPU utilization, and memory utilization.

- Continue to increment the database resource pool size and the stage runtime instances until you begin to see diminishing performance.

- If you are testing geocoding performance, repeat this procedure using single country and multi-country input.