iemodel evaluate model

The iemodel evaluate model command evaluates a Spectrum Information Extraction model that has already been trained.

Usage

iemodel evaluate model --n modelName --t testFileName --o outputFileName --c categoryCount --d trueOrfalse

| Required | Argument | Description |
|---|---|---|
| Yes | --n modelName | Specifies the name and location of the model you want to evaluate. Directory paths you specify here are relative to the location where you are running the Administration Utility. |
| Yes | --t testFileName | Specifies the name and location of the test file used to evaluate the model. |
| No | --o outputFileName | Specifies the name and location of the output file that will store the evaluation results. |
| No | --c categoryCount | Specifies the number of categories in the model; must be a numeric value. Note: This argument applies only to Text Classification models. |
| No | --d trueOrfalse | Specifies whether to display tables with a detailed, entity-wise analysis. The value must be true or false. true: The Model Evaluation Results table and the Confusion Matrix, described under Output, are displayed with the counts for each entity. false: The Model Evaluation Results table and the Confusion Matrix are not displayed; only the Model Evaluation Statistics are displayed. Note: Running the command without this argument has the same effect as specifying false. |

Output

- Model Evaluation Statistics

  Executing this command displays these evaluation statistics in a tabular format:

  - Precision: A measure of exactness. Precision is the proportion of the items the model identified (for example, the entities it extracted) that are correct.
  - Recall: A measure of completeness. Recall is the proportion of the relevant items in the test data that the model retrieves.
  - F1 Measure: A single measure of a test's accuracy that takes both precision and recall into account. F1 is the harmonic mean of precision and recall, so it is high only when both are high. It reaches its best value at 1 and its worst at 0.
  - Accuracy: A measure of the degree of correctness of the results, that is, how closely the model's output matches the known (expected) values across all predictions.

- Model Evaluation Results

  If the command is run with the argument --d true, the match counts of all the entities are displayed in a tabular format. The columns of the table are:

  - Input Count: The number of occurrences of the entity in the input data.
  - Mismatch Count: The number of times the entity match failed.
  - Match Count: The number of times the entity match succeeded.

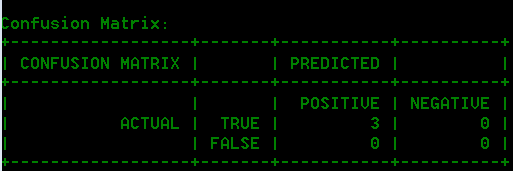

- Confusion Matrix

  The Confusion Matrix (shown below) visualizes how the algorithm performs by tabulating the model's predicted results against the actual results. It illustrates the performance of a classification model.
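The statistics above follow the standard classification definitions. They can all be derived from a confusion matrix, and the Python sketch below shows how. It uses illustrative conventions that are not taken from the utility: rows are actual classes and columns are predicted classes, and the entity names and counts are hypothetical. This page does not document how the utility aggregates figures across entities (per entity, micro-averaged, or macro-averaged), so its reported numbers may be computed differently.

```python
from typing import Dict, List


def class_metrics(matrix: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Per-class precision, recall, and F1 from a square confusion matrix.

    Assumption: matrix[i][j] counts items whose actual class is labels[i]
    and whose predicted class is labels[j].
    """
    n = len(labels)
    results: Dict[str, Dict[str, float]] = {}
    for k, label in enumerate(labels):
        tp = matrix[k][k]
        # Column total minus TP: items predicted as this class that belong elsewhere.
        fp = sum(matrix[i][k] for i in range(n)) - tp
        # Row total minus TP: items of this class predicted as something else.
        fn = sum(matrix[k]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[label] = {"precision": precision, "recall": recall, "f1": f1}
    return results


def accuracy(matrix: List[List[int]]) -> float:
    """Fraction of all items whose predicted class equals the actual class."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical two-entity confusion matrix (rows = actual, columns = predicted).
    labels = ["Person", "Location"]
    matrix = [[8, 2],
              [1, 9]]
    for name, scores in class_metrics(matrix, labels).items():
        print(name, {key: round(value, 3) for key, value in scores.items()})
    print("accuracy", accuracy(matrix))
```

The diagonal holds the correct predictions, which is why accuracy only needs the diagonal and the grand total. Precision and recall for a class read down its column and across its row, respectively.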

Example

This example:

iemodel evaluate model --n MyModel --t C:\Spectrum\IEModels\ModelTestFile --o C:\Spectrum\IEModels\MyModelTestOutput --c 4 --d true

- Evaluates the model called "MyModel"
- Uses a test file called "ModelTestFile" located in C:\Spectrum\IEModels
- Stores the output of the evaluation in a file called "MyModelTestOutput" in the same location
- Specifies a category count of 4
- Specifies that a detailed analysis of the evaluation is required
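When this command is generated from a script, the constraints in the argument table can be checked before the line is sent to the Administration Utility: --n and --t are required, --c must be numeric, and --d must be true or false. The Python sketch below assembles the example command from its parts. The helper name build_evaluate_command is hypothetical. It only builds the command string and does not run the utility. Rejecting a --c value that is not a positive integer is an added assumption that goes beyond the documented "numeric" requirement.

```python
from typing import List, Optional


def build_evaluate_command(
    model: str,
    test_file: str,
    output_file: Optional[str] = None,
    category_count: Optional[int] = None,
    detailed: Optional[bool] = None,
) -> str:
    """Assemble an 'iemodel evaluate model' command line (hypothetical helper)."""
    # --n and --t are the two required arguments.
    parts: List[str] = ["iemodel", "evaluate", "model", "--n", model, "--t", test_file]
    if output_file is not None:
        parts += ["--o", output_file]
    if category_count is not None:
        # --c must be numeric; this sketch additionally assumes a positive integer.
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(category_count, bool) or not isinstance(category_count, int) or category_count < 1:
            raise ValueError("category_count must be a positive integer")
        parts += ["--c", str(category_count)]
    if detailed is not None:
        # --d accepts only the values true or false.
        parts += ["--d", "true" if detailed else "false"]
    # Arguments are joined with single spaces and no quoting is applied,
    # so paths containing spaces are not handled by this sketch.
    return " ".join(parts)


if __name__ == "__main__":
    # Rebuilds the example command shown above.
    print(build_evaluate_command(
        "MyModel",
        r"C:\Spectrum\IEModels\ModelTestFile",
        r"C:\Spectrum\IEModels\MyModelTestOutput",
        category_count=4,
        detailed=True,
    ))
```

The optional arguments are emitted only when a value is supplied. Leaving detailed as None therefore omits --d entirely, which the argument table says behaves the same as --d false.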