Designing a Dataflow to Handle Exceptions

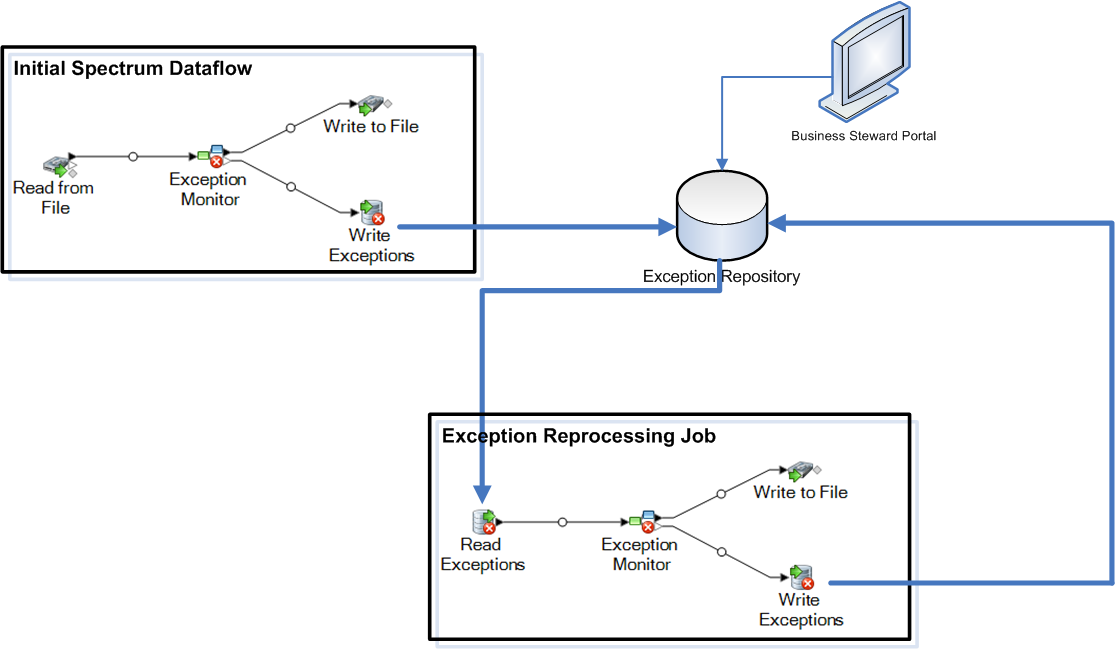

The basic building blocks of an exception management process are:

- An initial dataflow that performs a data quality process, such as record deduplication, address validation, or geocoding.

- An Exception Monitor stage that identifies records that could not be processed.

- A Write Exceptions stage that takes the exception records identified by the Exception Monitor stage and writes them to the exception repository for manual review.

- The Data Stewardship Portal, a browser-based tool, which allows you to review and edit exception records. After records are edited, they are resolved and reprocessed.

- An exception reprocessing job that uses the Read Exceptions stage to read approved records from the exception repository into the job. The job then attempts to reprocess the corrected records, typically using the same logic as the original dataflow. The Exception Monitor stage once again checks for exceptions. The Write Exceptions stage then sends exceptions back to the exception repository for additional review.

Here is an example scenario that helps illustrate a basic exception management implementation:

In this example, there are two dataflows: the initial dataflow, which evaluates the input records' postal code data, and the exception reprocessing job, which takes the edited exceptions and verifies that the records now contain valid postal code data.

In both dataflows there is an Exception Monitor stage. This stage contains the conditions you want to use to determine if a record should be routed for manual review. These conditions consist of one or more expressions, such as PostalCode is empty, which means any record not containing a postal code would be considered an exception and would be routed to the Write Exceptions stage and written to the exception repository. For more information, see Exception Monitor.
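To make the condition logic concrete, here is a minimal Python sketch of how a check such as PostalCode is empty might split records into passed records and exceptions. It is not Spectrum Technology Platform code: the function names `is_empty` and `monitor`, the sample records, and the rule that a record is an exception when any condition matches are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]
Condition = Callable[[Record], bool]


def is_empty(field: str) -> Condition:
    """Build a condition that is true when `field` is missing or blank."""
    def check(record: Record) -> bool:
        value = record.get(field)
        return value is None or value.strip() == ""
    return check


def monitor(records: List[Record],
            conditions: List[Condition]) -> Tuple[List[Record], List[Record]]:
    """Split records into (passed, exceptions).

    Assumption for this sketch: a record is an exception if ANY condition matches.
    """
    passed: List[Record] = []
    exceptions: List[Record] = []
    for record in records:
        if any(condition(record) for condition in conditions):
            exceptions.append(record)
        else:
            passed.append(record)
    return passed, exceptions


# Hypothetical sample data: one complete record, one blank code, one missing field.
records = [
    {"Name": "A. Smith", "PostalCode": "20706"},
    {"Name": "B. Jones", "PostalCode": ""},
    {"Name": "C. Lee"},
]
passed, exceptions = monitor(records, [is_empty("PostalCode")])
```

In this sketch, the records for B. Jones and C. Lee would be the ones routed onward for manual review, which corresponds to what the Write Exceptions stage receives.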

Any records that the Exception Monitor identifies as exceptions are routed to the exception repository using the Write Exceptions stage. Data stewards review the exceptions in the repository using the Data Stewardship Portal, a browser-based tool for viewing and modifying exception records. In our example, the data steward could use the Data Stewardship Portal Editor to manually add postal codes to the exception records and mark them as "Approved".

Once a record is marked "Approved" in the Data Stewardship Portal, it is available to be read back into a Spectrum Technology Platform dataflow using a Read Exceptions stage. Should any records still result in an exception, they are again written to the exception repository for review by a data steward.
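The round trip described above can be sketched in Python as follows. This is only an illustration under stated assumptions: `ExceptionRepository` is a hypothetical in-memory stand-in for the exception repository, `approve` simulates a data steward's edit in the Data Stewardship Portal, and `run` stands in for both the initial dataflow and the exception reprocessing job. None of these names come from the product.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Record = Dict[str, str]


@dataclass
class ExceptionRepository:
    """Hypothetical in-memory stand-in for the exception repository."""
    pending: List[Record] = field(default_factory=list)
    approved: List[Record] = field(default_factory=list)

    def write(self, records: List[Record]) -> None:
        """Analogous to the Write Exceptions stage."""
        self.pending.extend(records)

    def approve(self, index: int, **edits: str) -> None:
        """Simulate a data steward editing a pending record and approving it."""
        record = self.pending.pop(index)
        record.update(edits)
        self.approved.append(record)

    def read_approved(self) -> List[Record]:
        """Analogous to the Read Exceptions stage: hand over approved records."""
        records, self.approved = self.approved, []
        return records


def has_postal_code(record: Record) -> bool:
    return bool(record.get("PostalCode", "").strip())


def run(records: List[Record], repo: ExceptionRepository) -> List[Record]:
    """Return records that pass the check; write the rest to the repository."""
    passed = [r for r in records if has_postal_code(r)]
    repo.write([r for r in records if not has_postal_code(r)])
    return passed


repo = ExceptionRepository()
first_pass = run(
    [
        {"Name": "A. Smith", "PostalCode": "20706"},
        {"Name": "B. Jones", "PostalCode": ""},
        {"Name": "C. Lee", "PostalCode": ""},
    ],
    repo,
)
repo.approve(0, PostalCode="60601")  # steward adds a postal code for B. Jones
repo.approve(0)                      # steward approves C. Lee without a fix
second_pass = run(repo.read_approved(), repo)
```

In this sketch, the corrected B. Jones record passes on the second run, while the C. Lee record, approved without a postal code, is written back to the repository for another review.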

Consider the following questions when designing a dataflow to handle exceptions:

- How do you want to identify exception records? The Exception Monitor stage can evaluate any field's value or any combination of fields to determine if a record is an exception. Analyze the results your dataflow currently produces to decide how to identify exceptions. For example, you may want to flag only records in the middle range of the data quality continuum, rather than those that were clearly validated or clearly failed.

- Do you want edited and approved exception records reprocessed using the same logic as the original dataflow? If so, you may want to use a subflow to create reusable business logic. For example, the subflow could be used in an initial dataflow that performs address validation and in an exception reprocessing job that reprocesses the corrected records to verify the corrections. The two can then use different source and sink stages. The initial dataflow might contain a Read from DB stage that takes data from your customer database for processing. The exception reprocessing job would contain a Read Exceptions stage that takes the edited and approved exception records from the exception repository.

- Do you want to reprocess corrected and approved exceptions on a predefined schedule? If so, you can schedule your reprocessing job using Scheduling in the Management Console.
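The first two questions can be illustrated with a short Python sketch: one function holds the shared logic (playing the role of a subflow), and it is called once with records from a customer database and once with approved records from the exception repository. Everything here is hypothetical, including the `Confidence` field, the thresholds of 50 and 90, and the function names. The sketch only shows the idea of flagging the middle range while reusing the same logic with different sources.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, float]


def classify(record: Record, low: float = 50, high: float = 90) -> str:
    """Label a record by its (hypothetical) Confidence score."""
    score = record["Confidence"]
    if score >= high:
        return "validated"
    if score < low:
        return "failed"
    return "exception"  # middle range: worth a manual review


def shared_logic(source: Iterable[Record],
                 exception_sink: Callable[[Record], None],
                 low: float = 50,
                 high: float = 90) -> Dict[str, List[Record]]:
    """Reusable logic, analogous to a subflow; source and sink are supplied by the caller."""
    results: Dict[str, List[Record]] = {"validated": [], "failed": []}
    for record in source:
        outcome = classify(record, low, high)
        if outcome == "exception":
            exception_sink(record)
        else:
            results[outcome].append(record)
    return results


# Initial dataflow: the source is a stand-in for a customer database read.
customer_db = [
    {"Id": 1, "Confidence": 95},
    {"Id": 2, "Confidence": 70},
    {"Id": 3, "Confidence": 20},
]
repository: List[Record] = []
initial = shared_logic(customer_db, repository.append)

# Reprocessing job: the source is a stand-in for approved exception records.
# Assumption: the steward's correction raised the record's score on revalidation.
approved = [{"Id": 2, "Confidence": 92}]
reprocessed = shared_logic(approved, repository.append)
```

Keeping the thresholds as parameters reflects the advice to analyze current results before deciding where the middle range begins and ends.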